SeaWolf is an application for boaters navigating coastal waters. It will launch in early August exclusively on Windows 8, followed by Windows Phone 8 later this year. The goal for SeaWolf is to provide information about water depths, aids to navigation and other information that is helpful for boaters to safely navigate the coastal waters of the United States. To provide this information, SeaWolf leverages Bing Maps and a custom tile layer that overlays generated tiles on a Bing map. The following graphic from MSDN gives you an idea of how these tiles are organized:

Bing Maps Tile System (from MSDN)

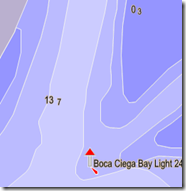

The graphic above shows only 3 levels, and you can see how quickly the number of tiles grows: each level has four times as many tiles as the one before it. To provide the level of detail boaters need, we have to go down to level 16. The following tiles are from levels 9, 10, 12, and 16, respectively; you can see the level of detail increase as you zoom in. (You may recognize this area as being near Tampa.)

So now you can see the challenge: providing an acceptable level of detail anywhere along the coastal areas of the United States will require well into the millions of tiles. For a company like Microsoft, which has significantly more resources than I do, creating these tiles across the globe is a large but doable task. For a small company like Software Logistics, doing the same even for a subset of the world (US coastal regions) could be seen as an overwhelming task, or at least a very expensive one.
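To make the factor-of-four growth concrete, here is a minimal Python sketch of the tile addressing Bing Maps uses, following the formulas in MSDN's "Bing Maps Tile System" article: a latitude/longitude is projected with spherical Mercator to a pixel position at a given level, and dividing by the 256-pixel tile size gives the tile's X/Y. The function names are illustrative and are not part of any Bing Maps SDK.

```python
import math
from typing import Tuple

MIN_LAT, MAX_LAT = -85.05112878, 85.05112878  # Mercator latitude limits used by Bing Maps
TILE_SIZE = 256  # pixels per tile edge


def tiles_at_level(level: int) -> int:
    """Each level splits every tile into four, so level n has 4**n tiles."""
    return 4 ** level


def latlon_to_tile(lat: float, lon: float, level: int) -> Tuple[int, int]:
    """Return the (tile_x, tile_y) containing a WGS84 point at the given level."""
    lat = min(max(lat, MIN_LAT), MAX_LAT)
    lon = min(max(lon, -180.0), 180.0)
    map_size = TILE_SIZE << level  # map width/height in pixels at this level
    x = (lon + 180.0) / 360.0
    sin_lat = math.sin(math.radians(lat))
    y = 0.5 - math.log((1 + sin_lat) / (1 - sin_lat)) / (4 * math.pi)
    # Clip to the valid pixel range, then convert pixels to tile indices.
    pixel_x = int(min(max(x * map_size + 0.5, 0), map_size - 1))
    pixel_y = int(min(max(y * map_size + 0.5, 0), map_size - 1))
    return pixel_x // TILE_SIZE, pixel_y // TILE_SIZE
```

For example, `latlon_to_tile(27.95, -82.46, 16)` gives the level-16 tile containing a point near Tampa, while `tiles_at_level(16)` shows why covering the whole world at that level is out of reach for a small shop: it is 4^16, over four billion tiles.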

Enter Azure

For version one of the tile-generation strategy, Azure Blob Storage was used. As you can probably guess from the above, pre-generating these tiles wasn't really an option, at least not one that could be repeated on a regular basis to keep the charts up to date. So the approach taken here was simply to have the SeaWolf client ask a custom HTTP handler for a tile. The code on the server then asked Azure Blob Storage for the tile; if it existed, the server simply forwarded it to the client. If not, the server spun up a process to load the map information and generate the tile on the fly. Once the tile was generated, the process uploaded it back to the cloud so it would be ready for the next request and the CPU cycles to generate it wouldn't be spent twice. This process also sent the tile to the SeaWolf client.

Route 1 – Tile Exists

Route 2 – Tile Does Not Exist
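The two routes above amount to a cache-aside pattern. The Python sketch below is a minimal illustration, not the actual SeaWolf handler: `InMemoryBlobStore` is a hypothetical stand-in for Azure Blob Storage, the blob naming (`<quadkey>.png`) is an assumption, and `generate` stands for whatever renders a tile.

```python
from typing import Callable, Dict, Optional


class InMemoryBlobStore:
    """Hypothetical stand-in for Azure Blob Storage: maps blob names to bytes."""

    def __init__(self) -> None:
        self._blobs: Dict[str, bytes] = {}

    def get(self, name: str) -> Optional[bytes]:
        return self._blobs.get(name)

    def put(self, name: str, data: bytes) -> None:
        self._blobs[name] = data


def get_tile(quadkey: str, store: InMemoryBlobStore,
             generate: Callable[[str], bytes]) -> bytes:
    blob_name = f"{quadkey}.png"  # assumed naming scheme
    cached = store.get(blob_name)
    if cached is not None:
        return cached  # Route 1: tile exists, just forward it
    tile = generate(quadkey)  # Route 2: render on the fly
    store.put(blob_name, tile)  # upload so the next request is a hit
    return tile  # and send it to the client
```

Every miss pays for a full render on the request path, which is exactly the cost that shows up once several testers are browsing areas nobody has visited yet.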

This process worked well, and without too much load, performance wasn't too bad. Generating a tile takes anywhere from 50ms to 2-3 seconds for complicated areas, and again, this is only necessary the first time the tile is requested. The problem with this approach is that it just doesn't scale when tiles don't exist yet: once we had 3-4 people testing in new areas at the same time, it was clear that generating the tiles was taking too long. My first thought was that we could simply spin up a "server farm's" worth of Azure Web Roles. This certainly could work, but for a small company it could get relatively expensive.

The Real Problem

The real problem is the server component. As long as I can't efficiently and cost-effectively pre-generate the millions of tiles, I'm going to need a server that receives tile requests and generates or downloads the tiles if they don't exist. If I can get rid of the server component and have the SeaWolf family of clients go directly against Azure Blob Storage, I can have something that scales to the level of SeaWolf app sales I'm hoping for, without maintaining a rack full of servers or paying a very expensive Azure Web Role invoice each month. The catch is that going directly against Azure Blob Storage means pre-generating the tiles, and the number of tiles that need to be generated is incredibly large.
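Going directly against blob storage works if every tile has a predictable URL the client can compute on its own. A minimal sketch, assuming tiles are stored under their Bing Maps quadkey in a container with public read access: the quadkey interleaves the bits of the tile X and Y (algorithm from MSDN's "Bing Maps Tile System" article), and the URL follows the standard `https://<account>.blob.core.windows.net/<container>/<blob>` form.

```python
def tile_to_quadkey(tile_x: int, tile_y: int, level: int) -> str:
    """One base-4 digit per level: bit i of X adds 1, bit i of Y adds 2."""
    digits = []
    for i in range(level, 0, -1):
        mask = 1 << (i - 1)
        digit = 0
        if tile_x & mask:
            digit += 1
        if tile_y & mask:
            digit += 2
        digits.append(str(digit))
    return "".join(digits)


def tile_blob_url(account: str, container: str,
                  tile_x: int, tile_y: int, level: int) -> str:
    """Build a public blob URL for a tile (assumes blobs are named <quadkey>.png)."""
    quadkey = tile_to_quadkey(tile_x, tile_y, level)
    return f"https://{account}.blob.core.windows.net/{container}/{quadkey}.png"
```

For example, `tile_blob_url("seawolftiles", "charts", 3, 5, 3)` yields `https://seawolftiles.blob.core.windows.net/charts/213.png`; the account and container names are made up for illustration. With no server in the path, scaling becomes the storage service's problem rather than mine.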

The Real Solution

Enter Azure again. At this point I had a server-side component that could take the coordinates of a tile as input and generate a 256x256 PNG tile that can be overlaid on a Bing map. I even had a console app that iterated through the levels and generated blocks of tiles at a time. Running it on my 3.8GHz quad-core i7 with 16GB of RAM wasn't too bad, but it still took a long time to generate the blocks and even longer (with a lot of network traffic) to upload them. Besides, I needed my desktop to do other things, like write code. My first idea, running multiple Azure instances to generate and serve tiles on demand, was cost prohibitive. However, spinning up a considerable number of Azure instances in parallel to generate these tiles, then taking them offline once generation is complete, could be very cost effective.

What I needed was a worker role that would iterate through the levels, generate the tiles and upload them back to Azure very quickly. This worked well since I can specify the data center for both the blobs and the worker roles. I'm not all that familiar with the Microsoft data centers, but I expect the pipe between the servers and the storage is more than large enough for this job.

Adapting the console app to the worker role was very easy and only took a few lines of code.
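Whether it runs in a console app or a worker role, generating a "block" of tiles comes down to enumerating one parent tile and every tile beneath it. A minimal Python sketch, assuming a block is a single tile at a starting level plus all of its descendants down to `max_level` (the post doesn't say exactly how its blocks are shaped):

```python
from typing import Iterator, Tuple

Tile = Tuple[int, int, int]  # (tile_x, tile_y, level)


def tiles_in_block(x: int, y: int, level: int, max_level: int) -> Iterator[Tile]:
    """Yield a tile and all of its descendants, level by level, down to max_level."""
    for z in range(level, max_level + 1):
        scale = 1 << (z - level)  # each level down doubles the tiles per axis
        for ty in range(y * scale, (y + 1) * scale):
            for tx in range(x * scale, (x + 1) * scale):
                yield tx, ty, z
```

A worker would pass each `(tile_x, tile_y, level)` to its render-and-upload routine (hypothetical here); yielding lazily avoids building a multi-thousand-tile list in memory before any work starts.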

My next challenge was finding a way to schedule and manage the groups of tiles that need to be generated. For the initial generation I decided to go from level 10 to level 16. That doesn't sound like much, but with the 600+ tile groups I needed to generate, a back-of-the-napkin estimate came to 2.5 million tiles. As you can see from the example level 16 tile, the information provided is more than adequate for the sailor.
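The arithmetic behind an estimate like this is a geometric series. As an illustration only, assume a tile group is one level-10 tile plus all of its descendants down to level 16 (the post doesn't define its groups this way). Each level down multiplies the count by four, so one such group would hold:

$$
N_{\text{group}} = \sum_{k=0}^{16-10} 4^{k} = \frac{4^{7}-1}{4-1} = \frac{16383}{3} = 5461
$$

More generally, a full pyramid from level $a$ down to level $b$ under one level-$a$ tile holds $(4^{\,b-a+1}-1)/3$ tiles, and the deepest level alone accounts for about three quarters of them.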

To do the scheduling I created an Azure Queue. The worker role pulled an item off the queue and started processing it; once it finished, it grabbed another item. If there were no more tile groups to generate, the instance went to sleep (of course, it was still running and accumulating costs).
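The queue-driven loop can be sketched in Python as follows. This is only an illustration: the standard-library `queue.Queue` stands in for the Azure Queue, and `process_group` is a hypothetical callback that generates one tile group. A real Azure Queue consumer would also delete each message after processing it, because an undeleted message becomes visible again once its visibility timeout expires.

```python
import queue
import time
from typing import Callable, List


def run_worker(work: "queue.Queue[str]", process_group: Callable[[str], None],
               idle_sleep_s: float = 0.0, max_idle_polls: int = 1) -> List[str]:
    """Pull tile-group ids until the queue stays empty; return the ids processed.

    max_idle_polls exists only so this sketch terminates; the worker role
    described above kept sleeping and polling (and billing) indefinitely.
    """
    done: List[str] = []
    idle_polls = 0
    while idle_polls < max_idle_polls:
        try:
            group_id = work.get_nowait()
        except queue.Empty:
            idle_polls += 1
            time.sleep(idle_sleep_s)  # nothing to do: sleep, then poll again
            continue
        idle_polls = 0
        process_group(group_id)  # generate and upload every tile in the group
        done.append(group_id)
        work.task_done()
    return done
```

Because each instance simply pulls the next item, adding instances (or a second subscription's worth of cores) needs no coordination beyond the shared queue.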

It took me a good morning to set up the above process, including a Windows Azure SQL Database to report and manage status. The problem at this point was management and monitoring. Although the tile generation software is fairly robust and stable, with the sheer quantity of tiles we are generating some sort of random failure occurs (I'm not sure whether it's in GDI+ or in how I'm using it). At this scale, even a .001% failure rate means failures will happen. A process was also needed to watch the status and restart stuck or failed instances. The following WPF app was created to manage this process and track status:

As each worker role processed a tile group, it updated the SQL Server with the status and number of tiles generated (at intervals of 100). The app simply executed a query against the SQL Server and had a simple mechanism to re-queue failed generations.

In the legend, blue squares are tile groups queued to be generated, and green ones have been generated. Yellow is in process, with the opacity of the yellow showing how far along that tile group is. The red square is a failed generation. On the right side we track individual worker roles with bar graphs showing progress, and the bottom of the right side shows idle worker roles.
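A minimal sketch of that status tracking, using Python's built-in `sqlite3` as a stand-in for Windows Azure SQL Database. The `tile_group` table, its status values and the function names are hypothetical; the 100-tile reporting interval and the re-queue step come from the description above. In practice, re-queuing would also put the group id back on the Azure Queue.

```python
import sqlite3
from typing import Iterable, List


def make_db() -> sqlite3.Connection:
    """Create an in-memory status table (hypothetical schema)."""
    db = sqlite3.connect(":memory:")
    db.execute(
        "CREATE TABLE tile_group ("
        " id TEXT PRIMARY KEY,"
        " status TEXT NOT NULL,"  # queued / in_process / done / failed
        " tiles_done INTEGER NOT NULL DEFAULT 0)"
    )
    return db


def _set(db: sqlite3.Connection, group_id: str, status: str, tiles_done: int) -> None:
    db.execute("UPDATE tile_group SET status = ?, tiles_done = ? WHERE id = ?",
               (status, tiles_done, group_id))
    db.commit()  # commit so the monitoring app sees progress immediately


def generate_group(db: sqlite3.Connection, group_id: str,
                   tiles: Iterable[object], report_every: int = 100) -> bool:
    """Process one group, reporting every `report_every` tiles; False if it failed."""
    _set(db, group_id, "in_process", 0)
    count = 0
    try:
        for _tile in tiles:
            # A real worker would render and upload the tile here.
            count += 1
            if count % report_every == 0:
                _set(db, group_id, "in_process", count)
    except Exception:
        _set(db, group_id, "failed", count)  # shows up red in the monitor
        return False
    _set(db, group_id, "done", count)
    return True


def requeue_failed(db: sqlite3.Connection) -> List[str]:
    """Reset failed groups to 'queued' and return their ids."""
    ids = [row[0] for row in
           db.execute("SELECT id FROM tile_group WHERE status = 'failed' ORDER BY id")]
    db.execute("UPDATE tile_group SET status = 'queued', tiles_done = 0 WHERE status = 'failed'")
    db.commit()
    return ids
```

Catching the failure and returning lets the worker move on to the next group instead of dying, which keeps a rare rendering error from idling a whole instance.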

So how did it work?

The process was started around noon with 15 worker roles running in parallel while I was perfecting it. At around 3PM, I added a new subscription so I could spin up 20 additional cores (note that there is a 20-core limit per Azure subscription). By 8PM that night, 2.5M+ tiles had been generated and all my tile groups had been successfully processed. In summary, it took about 8 hours, and when all was said and done, counting the instances running, storage used and network traffic, my bill for all this work was under $30. Not at all a bad cost for that much computing power. Azure gives companies like mine the capability to compete with organizations many times our size. It really is a game changer for the little guy who doesn't want to stay that way.

As part of this I learned that some areas, such as Key West, Long Island and Boston Harbor, took significantly longer than others, in some cases up to 2 hours to generate and upload the 5620 tiles that make up a tile group. By comparison, most groups took between 5 and 10 minutes. These long-running generations happened at the tail end of the process, so for the last 2 hours only 3-4 tile groups were being generated. I have metrics in the database on how long each tile group took, so the next time I do a re-generation, I'll start with the long ones first. I also learned that if you contact Azure support, you can have them increase the number of cores available to your subscription; I had them increase mine to 100. With my new strategy of starting with the long-running areas first, the bugs worked out of the system and 100 parallel instances running at the same time, I expect to be able to generate all the necessary tiles in less than two hours. This allows SeaWolf to have fresher maps at a fraction of the cost of any other approach.
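Starting with the long ones first is the classic longest-processing-time-first (LPT) scheduling heuristic. The Python sketch below simulates it under simplifying assumptions: per-group durations are known from a previous run (the values in the usage are hypothetical), all instances are identical, and each group goes to whichever instance frees up first.

```python
import heapq
from typing import List, Sequence


def makespan(durations: Sequence[float], workers: int) -> float:
    """Greedy list scheduling: each group starts on the first instance to free up.

    Returns the time at which the last group finishes.
    """
    finish_times = [0.0] * workers
    heapq.heapify(finish_times)
    for d in durations:
        earliest = heapq.heappop(finish_times)  # instance that frees up first
        heapq.heappush(finish_times, earliest + d)
    return max(finish_times)


def longest_first(durations: Sequence[float]) -> List[float]:
    """LPT order: schedule the longest-running groups first."""
    return sorted(durations, reverse=True)
```

With two instances and groups taking 1, 1, 1, 1 and 4 units, submission order finishes at 6 units while longest-first finishes at 4, because the long group no longer starts at the tail end while the other instance sits idle.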

Stay tuned for more on SeaWolf and Windows 8

-twb
